Learning AI Together

Teaching and learning about AI capabilities together across 126+ open-source projects • 8.2/10 community rigor • 20+ technology stacks we're exploring

What We're Learning

We explore AI systems together through collaborative experimentation, sharing both what works and what doesn't with full transparency.

Voice-AI Problem Solving

Investigating together, across 126+ community projects, whether conversational AI interfaces improve human-AI problem-solving. What we've found so far: 77% of the patterns we've explored are viable.

Agentic Task Specialization

Exploring how effective AI agents are across specialized domains, using implementations that are 42% JavaScript and 38% TypeScript. Shared learning: task-specific training improves performance.

Multi-Agent Coordination

Experimenting with coordination patterns as our community grows by 12% each quarter. Honest finding: coordination degrades once four or more agents are involved. Community rigor: 8.2/10 across 20+ technology contexts.

Our Learning Approach

How we learn and teach together about AI capabilities, sharing both successes and challenges with complete transparency.

Learning by Doing

Building real projects to test what AI can and can't do. Each project honestly documents what worked and what didn't, with step-by-step instructions so others can learn from our experience.

Collaborative Exploration

Exploring together across 17+ specialized agent types and domain areas. Learning about effectiveness boundaries, coordination patterns, and limitations through shared experimentation.

Open Sharing

Publishing everything we learn: the good, the bad, and the unexpected. All projects include replication instructions, honest documentation of limitations, and full transparency about what works and what doesn't.

Shared Learning Data

Collective learning across 126+ community projects, with an 8.2/10 rigor score and comprehensive documentation we all share.

- Continuous community monitoring

- Real-time shared insights

- Honest limitation sharing

Collaborative Validation

Finding that 77% of patterns are viable across 147M+ lines of shared code, with successes and failures documented transparently.

- Open limitation discussion

- Community pattern recognition

- Reproducible results anyone can verify

Multi-Technology Learning

Learning together across implementations that are 42% JavaScript and 38% TypeScript, with community rigor improving over time.

- Technology-agnostic pattern sharing

- Continuous collective improvement

- Community-driven evolution

Community Projects

Open-source projects we're building and learning from together

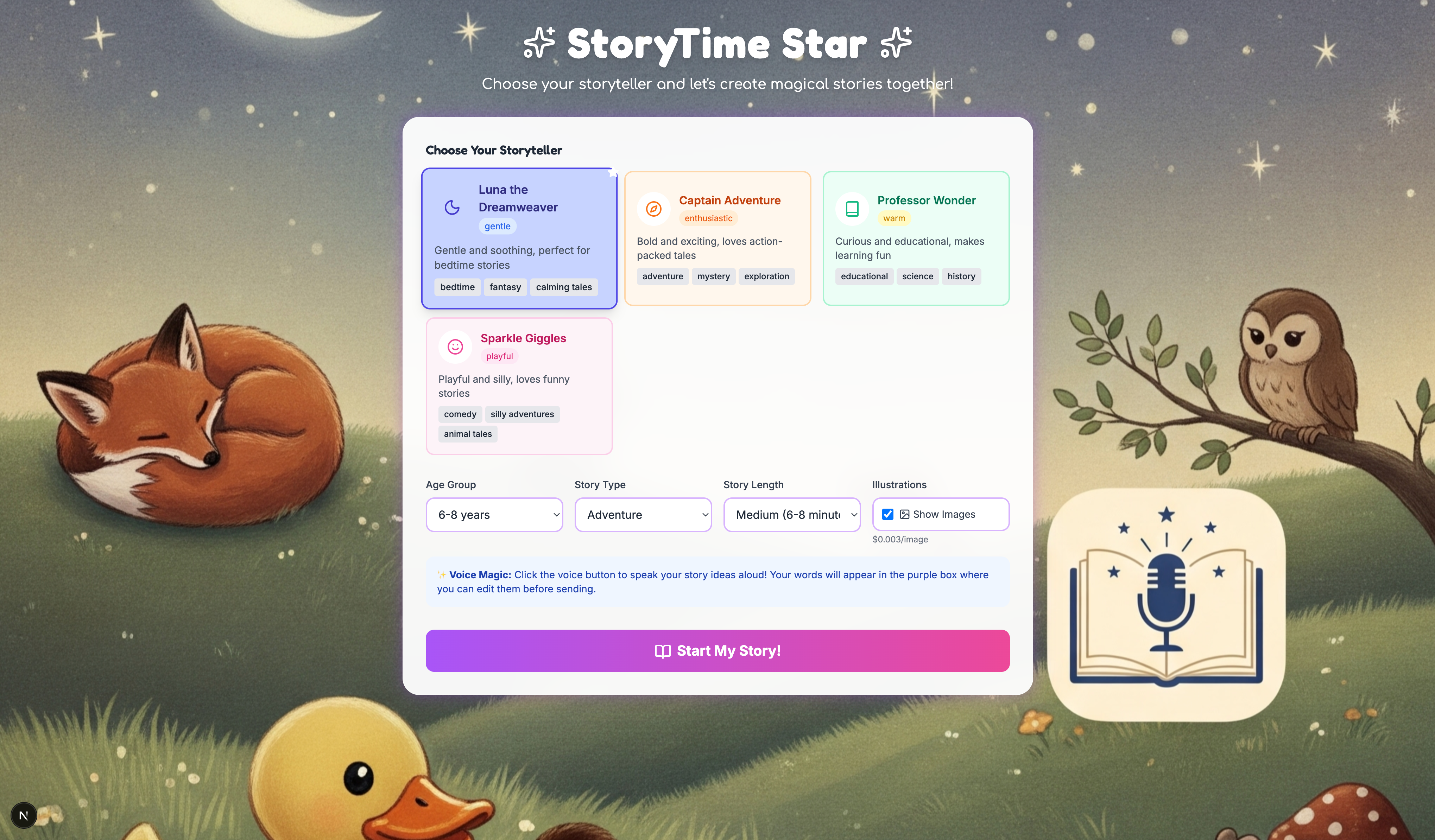

StoryTimeStar

What we're learning: AI can create engaging, age-appropriate stories. What works: Voice-enabled storytelling engages kids effectively. Honest challenge: Content filtering needs human oversight, and consistency is hard to maintain across longer narratives.

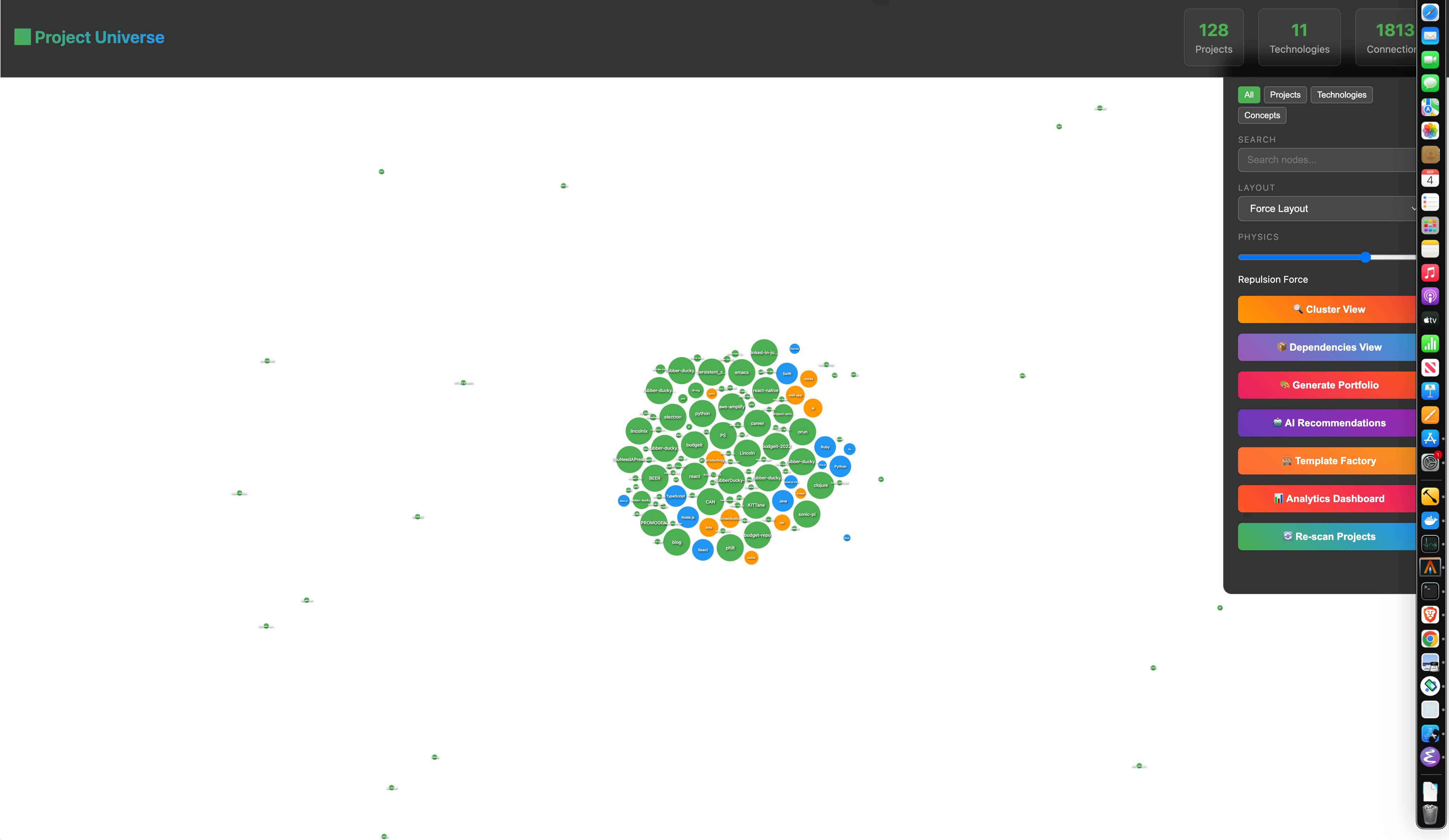

Project Universe

What we're learning: AI can spot patterns across different codebases. What works: Pattern recognition reaches 77% accuracy. Honest challenge: The AI struggles to switch context between different architectural styles.

ReplayReady Experiment

Hypothesis: AI can provide effective interview coaching. Finding: Question generation and feedback patterns show promise. Limitation: Difficulty assessing non-verbal communication and cultural context.

These are experimental implementations for research purposes. Each includes documented hypotheses, measured results, and identified limitations. Success rates and failure modes are transparently reported.

What We've Learned

Honest sharing of capabilities and limitations across 17+ specialized AI agent implementations

Development & Engineering

Effective: Code review, syntax checking, documentation generation

Limited: Complex architectural decisions, debugging non-deterministic issues

Business & Professional

Effective: Structured interview preparation, meeting facilitation

Limited: Cultural context awareness, nuanced business strategy

Content & Communication

Effective: Content structuring, summarization, creative ideation

Limited: Brand voice consistency, cultural sensitivity, original research

Specialized Services

Effective: Domain-specific factual information, structured workflows

Limited: Humor timing, contextual expertise, real-world application

Live Portfolio Metrics

Real-time insights from our active development portfolio


Data Transparency

All metrics shown are aggregated from our active development portfolio. No individual project details or sensitive information is exposed. Data source: static data.

Learning Community

Join our open learning community exploring AI capabilities and limitations together. Learn, teach, and contribute to transparent collaborative development.

Community Collaboration

Want to learn and build together? We welcome community members, open-source contributors, and anyone interested in learning about AI through hands-on projects. To teach is to learn!

Community Contact: anderson@sonander.dev

Projects: Community Portfolio

Open Source: All projects and learnings shared openly

Learn Together: All projects include documentation so you can learn with us